I’ve been asked more times than I can count: “What are you trying to build? You make so many different prototypes!”

Most people probably expect a few app ideas to be thrown around - but this really isn’t the point.

I care about what it feels like to use a computer - the aesthetics & visceral feeling of it. I don’t want to build an app. I want to re-invent the way we interact with our devices & move through the world.

The software of today feels overly constrained & analytical, Apollonian in nature. The primary way we’ve built software has been using rigid math & logic that feels utterly inaccessible to anyone who isn’t deep in STEM.

This has a huge impact on the kinds of software that people create & the people whose needs are served by technology.

Compare this to sketching a picture - I can start off with a rough idea and over time refine it. Sure, I can take an analytical approach to the composition, the ideas or even how I draw the lines. There are plenty of artists who are deeply technical and algorithmic in their work, but the point here is plurality.

The same can be said for cooking - you can be extremely rigid with timers, using measuring cups and precise recipes, or you can intuitively navigate a space and way-find through the kitchen to make a meal.

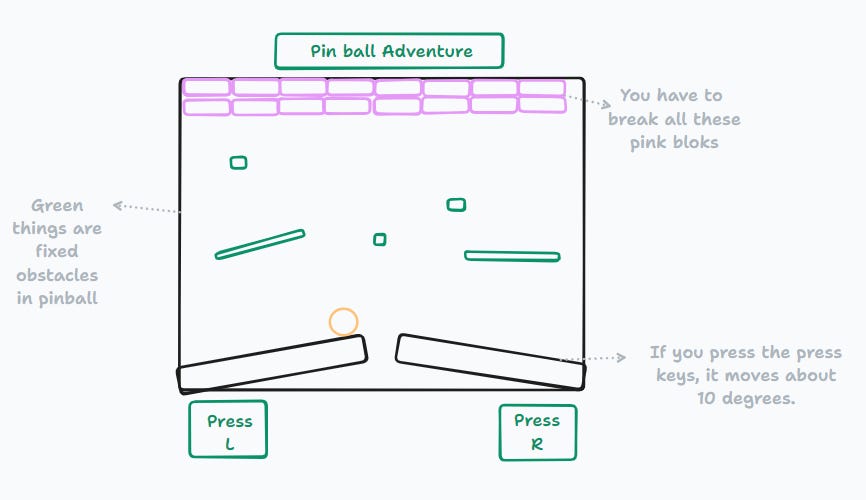

But software feels different to many other kinds of creations. An image is a single variation - possibility space collapsed into a single outcome. Compare this to something like procedural generation, where you define the bounds of what an image could possibly be and then get a different variation every time.

Software can be viewed through many lenses, but one that I find very useful is seeing it as a medium of process. It isn’t just the final meal or sketch. It’s the recipe.

This is what makes software feel different to other kinds of media. In a novel I could write a string of random characters to get my point across and it’s still “correct”. Maybe not by a formal standard, but so much writing bends & breaks the rules of traditional literature in favour of expression anyway. The reader acts as a fuzzy interpreter that projects their own meaning onto the media.

In the context of programming languages, we have these rigid interpreters that are expecting very specific syntax, otherwise the entire process falls over.

This is beginning to break down with LLMs and other AI systems. They’re able to step in and be the fuzzy interpreter, the squishy layer between the 0s & 1s and the person interacting with the device.

But language is not the only way we can express process! People are very visual creatures and we learn in many ways - when we see our friends doing something, the act of watching them helps us solidify the process in ourselves.

When you’re planning out some software with friends or teammates, how often do you start with a notepad or a whiteboard? Scribbling diagrams in a rough way before moving over to a codebase?

This squishy layer of communication is breaking down the rigidity of how we communicate & consume ideas on a device.

I think a good framing for this is “if you were to explain an idea to a friend, what would be the simplest way to do that?”. If you were giving them instructions on how to edit a podcast, there is no way you’d list every timestamp you’d like edited out of the audio file.

You’d probably say something like “cut out any long pauses that don’t add to the effect of the conversation, any unnecessary uhms or ahs that don’t express something useful, oh and the part where it goes off on a tangent, let’s cut that too”.

This is getting pretty close to what Descript did.

AI makes this possible by transformation. It gets your fuzzy inputs and translates them into some other form. In the context of a podcast, it’s getting that description and translating it into a series of edits to the audio file.
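To make that translation step a little more concrete, here is a minimal sketch of the mechanical half of it: turning “cut the long pauses and the uhms” into time ranges to remove. Everything in it is an assumption for illustration - the `Word` transcript format, the `FILLERS` list and the one-second `max_pause` threshold are all hypothetical, and the judgment calls (does this pause add to the conversation? is this a tangent?) are exactly the fuzzy part an AI system would have to supply.

```python
from dataclasses import dataclass

# Hypothetical transcript format: one entry per spoken word, timestamps in seconds.
@dataclass
class Word:
    text: str
    start: float
    end: float

FILLERS = {"uhm", "um", "uh", "ah"}  # assumed list of filler words

def merge_ranges(ranges):
    """Combine overlapping or touching (start, end) ranges into one."""
    merged = []
    for start, end in sorted(ranges):
        if merged and start <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged

def plan_cuts(words, max_pause=1.0):
    """Return the (start, end) time ranges that should be removed."""
    cuts = []
    prev_end = 0.0
    for word in words:
        if word.start - prev_end > max_pause:
            cuts.append((prev_end, word.start))  # silence longer than max_pause
        if word.text.lower().strip(".,!?") in FILLERS:
            cuts.append((word.start, word.end))  # filler word
        prev_end = word.end
    return merge_ranges(cuts)

words = [Word("So", 0.0, 0.3), Word("uhm", 0.4, 0.8), Word("hello", 2.5, 3.0)]
print(plan_cuts(words))  # [(0.4, 2.5)]
```

The output is a plain list of edits, which is the point: the fuzzy description goes in one end and something an audio editor can act on comes out the other.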

We can use this kind of transformation on the consumption side as well. AI becomes the ultimate accessibility tool. I’m not only talking about people with physical or mental impairments, but also people with temporary accessibility needs.

I don’t know about you - but I only really listen to podcasts when I’m doing something with my hands, or I’m out and about in a way that I couldn’t just read a physical book or watch a movie. I listen when I’m on a long drive, doing some chores or going for a walk.

Giving computers the ability to translate media across modalities like this means we can have media that is truly adaptive to what we’re doing, so that we can consume and interact with it in more convenient ways.

When combining this with search and chatbots, it allows us to pause the media we’re listening to and ask it questions. “Oh, what did they mean by that?”, etc.

Ok so there’s a bit of handwaving going on here about what it means to translate media across modalities. Not all mediums have mathematical equivalence and even if they did, they have different affordances. A good movie is not a direct translation from a book, it’s an adaptation. Books often do a better job at being introspective, whereas movies often focus on the action & “show, don’t tell”. You can be much more poetic with language in a book than in a movie & get away with it.

Ok let’s go to a really concrete example.

When I’m trying to generate an image of a dog and I say “A dog in a hyper realistic style with dramatic lighting”, that could mean any number of things. What breed is it? What pose? Is it young? Old? What’s its fur like? What’s in the background? You get my point.

People like to talk in generalities and use fuzzy terms when they describe things, but often you’ll get much better results with these systems if you get more specific. So if someone doesn’t know what they mean by a dog, it becomes an exploration problem. How do you efficiently carve up the possibility space of types of dog so that the person doing the prompting can get to what they want as quickly as possible?

One solution is to show variations of dog that are maximally far apart in terms of their properties. This way the user can recursively generate variations by selecting which dog is most similar to what they want, until they home in on what they actually want from the media. Injecting randomness into the prompts is another way to spread the variations out.
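One way to pick variations that are far apart is greedy farthest-point sampling, sketched below. This is my stand-in rather than a description of how any particular image tool works, and it assumes each candidate dog can be described by a numeric feature vector (an embedding, say), which is an assumption on top of the idea above. The function name and the toy 2D points are made up for illustration, and the greedy approach only approximates a truly “maximal” spread.

```python
import math

def farthest_point_sample(points, k):
    """Greedily pick k indices so each new pick is as far as possible
    from everything already picked."""
    chosen = [0]  # start from the first candidate (an arbitrary choice)
    # Distance from every point to its nearest already-chosen point.
    min_dist = [math.dist(p, points[0]) for p in points]
    while len(chosen) < min(k, len(points)):
        nxt = max(range(len(points)), key=lambda i: min_dist[i])
        chosen.append(nxt)
        min_dist = [min(d, math.dist(p, points[nxt]))
                    for d, p in zip(min_dist, points)]
    return chosen

# Toy 2D "dog features"; real ones would be high-dimensional embeddings.
candidates = [(0, 0), (1, 0), (10, 0), (0, 8), (5, 5)]
print(farthest_point_sample(candidates, 3))  # [0, 2, 3]
```

Each round of selection would then repeat the same sampling, but only over candidates near the dog the user picked.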

Another solution here is personalisation. This can help carve up the possibility space so that the system knows what you mean when you say “dog” or a specific character’s name. When you say “A picture of Frank eating his favourite fruit”, if an AI system has enough context about who Frank is and what his favourite fruit is, it shouldn’t be a problem for it to generate the image.

There are also social solutions to this kind of discovery problem. It isn’t by accident that Midjourney decided to launch on Discord. By being in a social setting surrounded by other people making prompts, it can inject inspiration & variation into the prompting process. It’s also the reason why so many AI image generation websites have a wall of other people’s generations on the homepage.

This brings us to the idea of decomposition and remixing.

Giving computers the ability to understand messy forms of communication doesn’t stop at an entire piece of media. It also lets the computer understand the components of that media, break them apart (decompose them) and remix them. These mood boards or collections of snippets become resources that can be harvested for use in the creative process. It’s like you’re foraging for all the things you like.

All of this is to say that if the barrier between media is being eroded by AI, then the barrier between computation and other forms of media is also being broken down. I see a future where computation finds its way into everything.

What does a fuzzy calendar or scheduling system look like that blurs the line between cron jobs / computation & traditional scheduling?

What does it mean if the physical objects in your home are imbued with computation? That trinket from a trip to the snow becomes part of a memory palace that brings up notes & memories associated with the trip with your family? Or the record collection that brings up live concerts coming in your local area or new songs released by the artist?

What does it mean when software becomes ephemeral and adaptive? Currently large scale applications and social media are ballooning into bloated messes that are trying to accommodate the needs of billions of people. What if they didn’t need to be? What if the spaces we inhabited online were like shifting sandcastles, washed away when they were no longer needed?

Every social media platform has emphasised a specific media type. Instagram (images), OG Twitter (text), TikTok (short form video). What does it mean for a social media platform to emphasise fuzzy computation? How does this change the way we interact with each other and our devices?

Desire paths form when enough people walk over grass & erode it through repeated use. A similar thing happens as a more manual process today (e.g. the hashtag evolving out of a need users had). What if that could happen automatically?

I can talk to a friend and invent arbitrary rules for logic or computation and they can usually follow along (to a certain level of complexity). This sort of interpretive computation & dynamic media is only beginning to be born.

What would it mean to be able to describe the rules of a social game like “Werewolf / Mafia” and have the computer create concrete computation behind the scenes to enforce them?

What does it mean when the primitive data structures that we are working with on computers are not rigid types, but instead amorphous piles of goop?

Most of the data created today is unstructured. We send screenshots, blobs of text in DMs, PDFs, have video calls, send voice notes to each other, etc. It’s telling that rather than copying text to other people we often send it as a screenshot.

For a second let’s assume we even have structured data - how many times do we need to create translation methods to transform it into the correct shape for use in another part of an application? The problem with our software is at the heart of the building blocks we use.

We could create data as a messy blob of information, carve it up and transform it into the shape that we need it at the time that we need it.
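Here is a rough sketch of what “carve it up at the time we need it” could look like. The blob is stored as-is, and each shape is only projected out of it on demand. The regexes below are a deliberately crude stand-in for the fuzzy interpreter (in practice that role would be played by a model), and the `Event` type and the `carve_event` / `carve_items` helpers are hypothetical names made up for this example.

```python
import re
from dataclasses import dataclass
from typing import Optional

@dataclass
class Event:
    title: str
    time: str

def carve_event(blob: str) -> Optional[Event]:
    """Project a raw blob into an Event shape, only when an event is needed."""
    match = re.search(
        r"([\w ]+?) at (\d{1,2}(?::\d{2})?\s?(?:am|pm))", blob, re.IGNORECASE
    )
    if match is None:
        return None
    return Event(title=match.group(1).strip(), time=match.group(2))

def carve_items(blob: str) -> list:
    """Project the same blob into a list of things to bring."""
    return re.findall(r"bring (\w+)", blob, re.IGNORECASE)

# The data stays a messy blob; the shapes are created when asked for.
blob = "hey! dinner with Sam at 7pm on friday, bring wine"
print(carve_event(blob))  # Event(title='dinner with Sam', time='7pm')
print(carve_items(blob))  # ['wine']
```

Nothing about the blob had to be decided up front: a calendar can ask for an `Event`, a shopping list can ask for items, and a shape nobody asks for never has to exist.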

Our software is rigid because our data structures are rigid.

— But they don’t have to be.